Curation under Constraint

Is it possible to develop a curation protocol for web3 that doesn't repeat the errors of Web 2.0 platforms?

A glitch is an unexpected moment in a system that, by catching us off guard, reveals aspects of that system that might otherwise have gone unnoticed. At its best, a glitch is an epiphany. Is revelatory surprise possible in the black boxes of popular contemporary AI systems? Below I describe several experiments exploring the possibilities of glitching artificial neural networks, in order to better understand the logics and politics governing our digital lives. As with most of my glitch thoughts, I developed these in conversation with other artists at https://not.gli.tc/h in Chicago and on the Artificial Unintelligence Discord server.

Artificial neural networks take some arbitrary data as input and return some output that corresponds to it. On the inside, they are multidimensional grids, with billions of parameters connected across multiple layers. A raw neural network is like a multidimensional Plinko board. At first, when you drop a disc in the top (the input data), there’s no telling in which of the board’s slots (outputs) it will land. But with enough data, we can slowly adjust all the pegs in our Plinko board so that the right discs always end up in the right slots. After we’ve finished adjusting the size and shape of the board’s pegs, the newly “trained” Plinko board could be called a “model,” capable of making accurate predictions about every disc dropped in henceforth.
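To make the Plinko metaphor concrete, here is a minimal sketch of "adjusting the pegs." It assumes the simplest possible model: two adjustable numbers, `w` and `b`, rather than billions of parameters. The function name `train`, the learning rate, and the sample data are all illustrative choices of mine, not taken from any real system.

```python
import random

def train(samples, steps=2000, lr=0.05, seed=0):
    """Fit y ~ w*x + b by nudging w and b a little after each example."""
    rng = random.Random(seed)
    # The untrained "pegs": random values, so early predictions are arbitrary.
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(steps):
        x, y = rng.choice(samples)      # drop one disc
        error = (w * x + b) - y         # how far from the right slot did it land?
        w -= lr * error * x             # adjust each peg slightly to reduce the error
        b -= lr * error
    return w, b

# Illustrative data generated from the rule y = 2x + 1.
samples = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train(samples)
print(round(w, 3), round(b, 3))
```

After enough examples the two numbers settle close to the rule hidden in the data. Nobody typed that rule in, and the same is true at scale.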

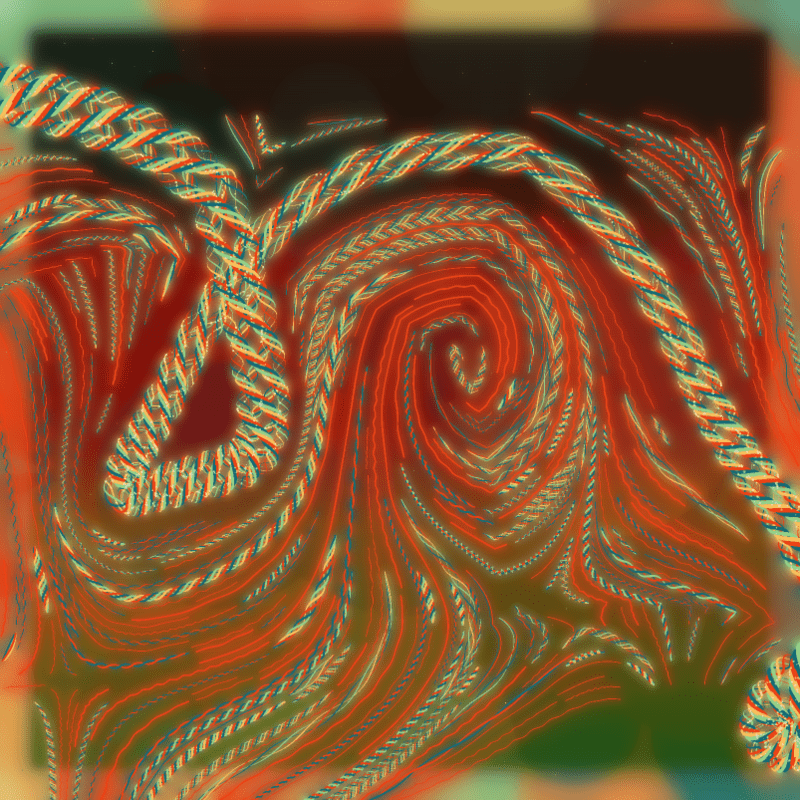

Today there are models that can drive cars, predict the future, and create art. The most popular neural networks for the latter purpose are called diffusion models; they can synthesize images from any text input. I repeatedly submitted the prompt “glitch art” to OpenAI’s Dall-E. Many glitch artists would not consider the outputs glitches in the purest sense, but rather what designer and writer Shay Moradi has called “glitch-alikes,” images with glitch aesthetics created by some other means. My “glitch art”–prompted images were not produced by corrupting the data, short-circuiting the neural network, or otherwise misusing an AI model. The model is operating exactly as intended.

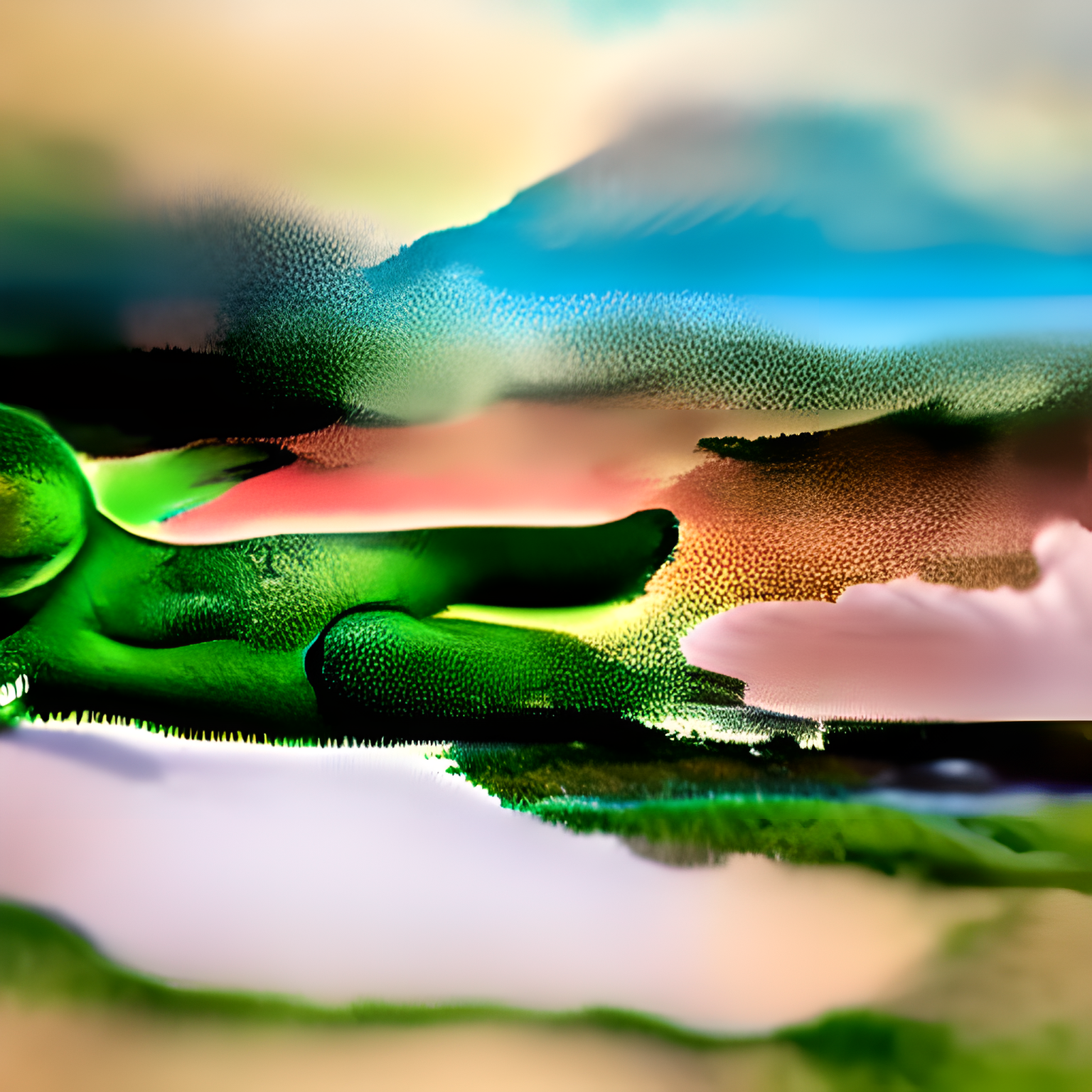

Apps designed to produce glitch-alikes have been around for more than a decade. Search the app stores today and you’ll find GlitchCam, Glitch Art Studio, and ImageGlitcher, among others that apply various filters to re-create the effects of data corruption. Just as early Instagram filters imitated the look of analog photography, these glitch apps simulate the breakdown of digital media. But most of these produce images that wouldn’t fool an experienced glitch artist’s eye.

The new AI models, however, create fairly convincing glitch-alikes. Some of the results I obtained from Dall-E remind me of the glitches I’d get when corrupting video compression algorithms—a process described in my Glitch Codec Tutorial—but upon closer inspection I noticed peculiar artifacts, not those associated with image compression, but rather ones unique to the diffusion process. The macro blocks produced by compression are uniform in size, but here they were not. The grid of pixels that becomes more visible when glitching images should be perfectly aligned, but these were structured slightly differently. In many of these prompted images, the pixels almost look as though they were painted by hand, the way Gerhard Richter would reproduce the artifacts of photography in his paintings. This is likely a reflection of the model’s bias; I’d guess its training data contains fewer glitched jpgs than it does paintings made by hand. In this way, the AI-generated glitch-alike is, like the classic rabbit-duck illusion, a kind of “ambiguous image.” Depending on how we set our focus, it can lead to insights into the workings of these new systems, which qualifies it as a “real glitch” after all.

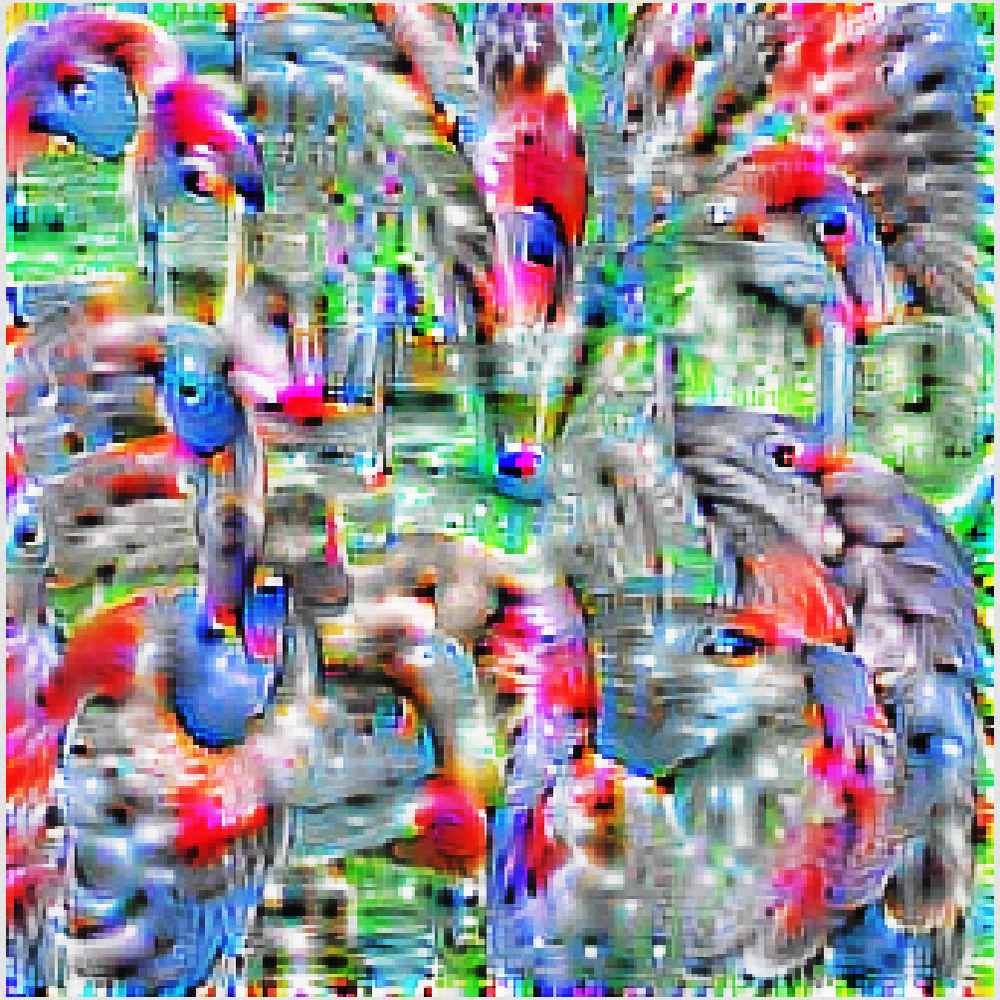

Glitch art often reveals aesthetics inherent to digital formats. A jpg of a flamingo will appear the same as a png or gif of the same flamingo, but when these files are glitched each will render entirely different visual artifacts, reflecting the distinctive algorithmic logic behind each file type. How might we reveal the algorithmic logic behind an AI? What perspective can we gain from this? What sorts of new glitchy artifacts await us? Using a piece of technology the “wrong” way has always been a core tenet of glitch art. As glitch artists, we use music software to edit images, digital imaging tools to edit audio, and text editors to edit everything but text. Applying this logic to AI, we might try to create images with a model that was trained to produce something else.
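For readers who have never glitched a file by hand, the classic technique can be sketched in a few lines: overwrite a handful of bytes and see how the format's decoder copes. This is a rough sketch under stated assumptions. The name `glitch_bytes` is mine, and the 512-byte `skip` is only a guess at how much header to leave alone. Real formats differ, and finding where a file stops being openable is part of the fun.

```python
import random

def glitch_bytes(data: bytes, n_changes: int = 10, skip: int = 512, seed: int = 0) -> bytes:
    """Return a copy of data with n_changes random bytes overwritten after the first skip bytes."""
    rng = random.Random(seed)
    out = bytearray(data)
    if len(out) <= skip:
        return bytes(out)               # too small to corrupt safely; return unchanged
    for _ in range(n_changes):
        i = rng.randrange(skip, len(out))   # never touch the assumed header region
        out[i] = rng.randrange(256)         # replace with an arbitrary byte value
    return bytes(out)

# Stand-in data; with a real image you would read it with open(path, "rb").read()
# and write the result to a NEW file so the original survives.
original = bytes(range(256)) * 8
glitched = glitch_bytes(original, n_changes=20)
changed = sum(1 for a, b in zip(original, glitched) if a != b)
print(changed, "bytes differ")
```

Run the same function over a jpg, a png, and a gif of the same picture and each format will break in its own way.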

In 2014, researchers at Google developed the deep neural network GoogLeNet to train models to classify or “predict” the contents of an image based on textures and patterns. If you show GoogLeNet an image of a flamingo, it will output confirmation that it is indeed a flamingo, with some numerical confidence score. Input an image of a platypus, and it will tell you it’s most likely a platypus. Input an image of a coffee mug, and it may tell you that it’s a dumbbell (especially if that mug is being held by a muscular human arm). When these algorithms fail, it’s incredibly difficult to debug their logic, because the values of a model’s parameters are not set by humans. Rather, they are “learned” by the machine while training its neural network on large amounts of data.

In the hopes of understanding why the models err, researchers at Google flipped GoogLeNet around. Instead of inputting image data and outputting the predicted class, they used the neural network the “wrong way,” inputting the predictions and returning an image. This was how they identified the combination of pixels that would score the highest for any given class. A researcher named Tim Sainburg re-created this study and shared his findings in a Python notebook, which I used to generate the most mathematically flamingo-like flamingo. The resulting abstract arrangement of pixels may not look like a flamingo to you, but it is in fact the purest expression of a flamingo the machine can imagine. The visual artifacts in this image are one example of what spills out of the black boxes when we crack them open. They begin to hint at the patterns this model identified once trained, revealing an otherwise invisible aspect of the system.
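The idea of running a classifier "backward" can be sketched without a neural network at all. The toy below is not GoogLeNet, and it is not Sainburg's notebook. It assumes a made-up two-class classifier over a four-pixel "image," with weights I invented for illustration. It freezes those weights and repeatedly nudges the pixels in whichever direction raises the score for the target class.

```python
import math

# Hypothetical toy classifier: one weight per pixel for each class.
WEIGHTS = {
    "flamingo": [2.0, -1.0, 0.5, -2.0],
    "platypus": [-1.0, 2.0, -0.5, 1.0],
}

def probabilities(pixels):
    """Softmax over each class's weighted sum of pixel values."""
    logits = {c: sum(w * p for w, p in zip(ws, pixels)) for c, ws in WEIGHTS.items()}
    top = max(logits.values())
    exps = {c: math.exp(v - top) for c, v in logits.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}

def most_class_like(target, steps=200, lr=0.1):
    """Gradient ascent on the input: change the image, not the model."""
    pixels = [0.5] * 4                  # start from flat gray
    for _ in range(steps):
        probs = probabilities(pixels)
        for i in range(len(pixels)):
            # Gradient of log p(target) with respect to pixel i.
            grad = WEIGHTS[target][i] - sum(probs[c] * WEIGHTS[c][i] for c in WEIGHTS)
            pixels[i] = min(1.0, max(0.0, pixels[i] + lr * grad))  # keep pixels in [0, 1]
    return pixels

image = most_class_like("flamingo")
print([round(p, 2) for p in image], round(probabilities(image)["flamingo"], 3))
```

The resulting "image" is just the set of pixel values this toy model finds most flamingo-like, which is why such outputs look like abstractions rather than birds.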

Most AI-generated images today are created using diffusion models, not hacked image classifiers like GoogLeNet. While it is technically possible to set up and train your own diffusion model from scratch, the bulk of these images are produced with user-facing applications created and controlled by Midjourney and OpenAI.

When a user submits a text prompt to a diffusion model, they must typically wait for a few seconds before seeing the results. While they wait they are presented with a progress bar, but behind the scenes a much more interesting visualization is taking place. Diffusion models take their name from a key aspect of this process: each image begins with Gaussian noise, a somewhat randomized matrix of pixels. Then, through an algorithmic process like a stochastic differential equation, the Gaussian noise is “de-noised” and smoothly transformed into the image prompted by the user. One of the ways tools like Dall-E suggest how artists should operate is by deciding for us what the most optimized settings for the highest quality image should be, such as which sampling method to use (a stochastic differential equation is one of many) and how many times to run it. However, glitch artists often prefer to intentionally deoptimize an application’s settings so that it might perform poorly, such as rendering overly compressed video to invoke video artifacts.
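The loop behind the progress bar can be caricatured in a few lines. This is a deliberately crude sketch, not how Stable Diffusion or Dall-E work internally. A real model uses a trained network to estimate the noise at each step, whereas this toy simply assumes the finished image (`target`) is already known and walks toward it. The names `toy_denoise` and `stop_after` are mine. What it does show is the role of the step count, and what is left if the loop is stopped early.

```python
import random

def toy_denoise(target, total_steps=20, stop_after=None, seed=0):
    """Start from Gaussian noise and move toward target over total_steps steps."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in target]   # pure Gaussian noise
    run = total_steps if stop_after is None else min(stop_after, total_steps)
    for step in range(run):
        remaining = total_steps - step
        # Remove an equal share of the remaining noise; the last step lands on target.
        image = [px + (t - px) / remaining for px, t in zip(image, target)]
    return image

def distance(a, b):
    """Euclidean distance between two equally sized lists of pixel values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

target = [0.0, 0.25, 0.5, 0.75, 1.0]                # stand-in for the prompted image
finished = toy_denoise(target)                      # the "optimized" setting
interrupted = toy_denoise(target, stop_after=1)     # deliberately deoptimized
print(distance(finished, target), distance(interrupted, target))
```

With every step completed, the noise is gone. Stopped after a single step, the output is still mostly static, with only a faint pull toward the picture.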

Trained AI models are already black boxes, but these functions become even more opaque when accessed through apps. By depending on corporate interfaces, we rob ourselves of potential creative misuses of the code behind the apps. By embracing open source alternatives like Stable Diffusion, a collaboratively trained model, we can have our app and glitch it too. Because it is open source, Stable Diffusion is freely available, and the community has created a number of variations of the model and modes for interfacing with it. Open-source, community-created graphical apps, such as Automatic1111, also provide a much greater degree of control than Midjourney or Dall-E. When you set up these apps on your own computer, you can experiment and hack them any way you like.

For example, it’s recommended to run the de-noising algorithm fifteen or twenty times to minimize artifacts. Through Automatic1111’s graphical interface, I used various versions of Stable Diffusion to run the de-noising process on a prompted image only once, interrupting the process before the model had a chance to finish its drawing. The result was a semi-abstract landscape, warped as if seen through a tilt-shift lens.

I don’t think it’s necessary to write your own code in Python or run a local copy of Stable Diffusion on your own machine to glitch AI. It is possible to instigate “authentic” glitches simply by prompting the system through the provided interface. The trick is to avoid prompting it directly for a glitch. What if we prompt it with gibberish text? What if we insert a prompt written in uncommon Unicode characters, emojis or l33t speak? The better we understand these systems and the process occurring behind the scenes, the more likely we might conjure prompts capable of casting glitchy spells on the model. For example, we know that diffusion models start by creating an image of colorful static known as Gaussian noise, before de-noising it a number of times to produce an image matching the prompt. So what happens if we prompt the model to make Gaussian noise?
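Mangling a prompt before pasting it into an interface takes very little code. The sketch below is only one possible approach. The l33t substitution table is my own arbitrary choice, and the "zalgo" effect is approximated by stacking characters from the Unicode Combining Diacritical Marks block (U+0300 to U+036F) on each letter. How any given model reacts to the output is an open question, which is the point.

```python
import random

# An arbitrary l33t substitution table; swap in your own.
LEET = str.maketrans({"a": "4", "e": "3", "i": "1", "o": "0", "s": "5", "t": "7"})

def to_leet(prompt):
    """Lowercase the prompt and replace some letters with look-alike digits."""
    return prompt.lower().translate(LEET)

def to_zalgo(prompt, marks_per_char=3, seed=0):
    """Stack random combining marks on every non-space character."""
    rng = random.Random(seed)
    out = []
    for ch in prompt:
        out.append(ch)
        if not ch.isspace():
            out.extend(chr(rng.randint(0x0300, 0x036F)) for _ in range(marks_per_char))
    return "".join(out)

print(to_leet("glitch art"))
print(to_zalgo("glitch art"))
```

Raising `marks_per_char` makes the text climb further out of its line, at the cost of legibility to people and, possibly, to the model.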

Diffusion models are trained on an immense dataset of image/text-description pairs. These text descriptions are broken up into “tokens,” which are often small words or word fragments. For example, “graffiti,” “pizza,” “cat,” “th,” “ly,” and “ing” are all tokens found in Stable Diffusion’s vocabulary. In the case of large language models like ChatGPT, both our input and its output are broken down into and built up from a series of tokens. Because the tokens in a model’s vocabulary reflect the most common patterns of text found in its training data, it’s reasonable to expect these tokens to mirror the most commonly used words. This is why researchers inspired by the Google team were surprised to find groupings of “anomalous tokens,” like “?????-?????-” and “rawdownloadcloneembedreportprint.” Even stranger, GPT could not seem to output these tokens. When prompted to repeat them, it would often respond in strange and unexpected ways, like “They’re not going to be happy about this” and “You’re a fucking idiot.” As a result, these unusual tokens have now been dubbed “glitch tokens.” Leaning further into our understanding of these systems, how might we use (or misuse) specific tokens in a diffusion model’s vocabulary to instigate unexpected results?
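To get a feel for how a prompt falls apart into tokens, here is a simplified sketch. It is not the tokenizer Stable Diffusion or GPT actually use, since those learn their vocabularies from data. This toy just takes the longest matching piece from a tiny hand-written vocabulary and falls back to single characters. The vocabulary mixes tokens named above with one I added for illustration ("glitch").

```python
# Tiny illustrative vocabulary; "glitch" is a hypothetical addition.
VOCAB = {"graffiti", "pizza", "cat", "th", "ly", "ing", "glitch"}

def tokenize(text, vocab=VOCAB):
    """Greedy longest-match split of each word into vocabulary pieces."""
    tokens = []
    for word in text.lower().split():
        i = 0
        while i < len(word):
            # Try the longest remaining piece first, shrinking until one matches.
            for j in range(len(word), i, -1):
                piece = word[i:j]
                if piece in vocab or j == i + 1:   # single characters always match
                    tokens.append(piece)
                    i = j
                    break
    return tokens

print(tokenize("glitching cat"))
print(tokenize("thingly"))
```

A string the vocabulary has rarely or never seen shatters into many small fragments, and that is a reasonable first place to look when hunting for prompts that behave strangely.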

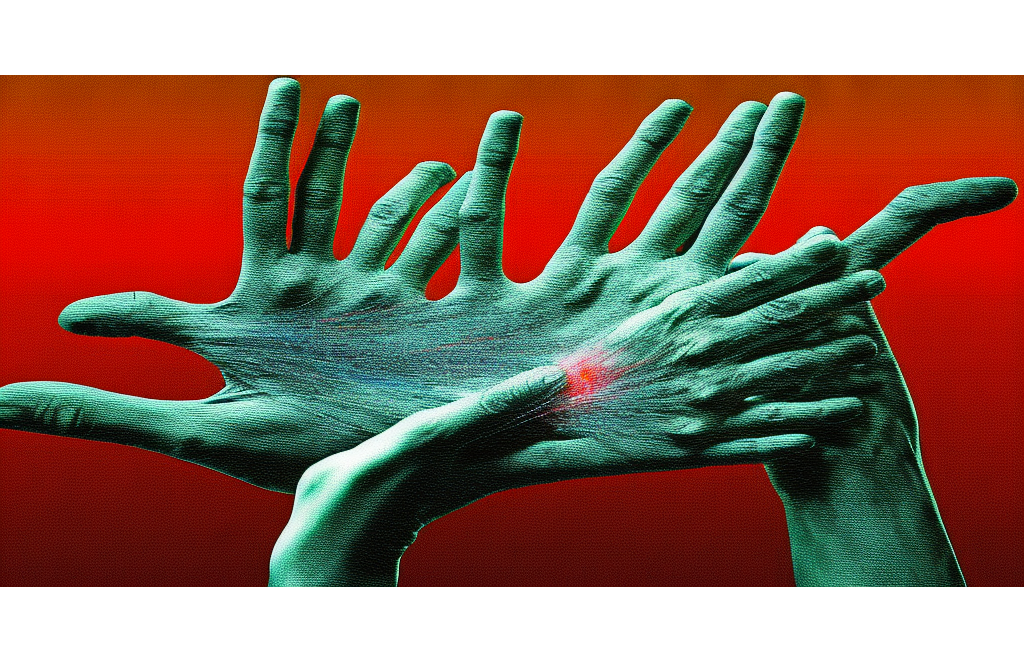

Anticipating the unexpected can be a tricky practice. When dealing with new and unfamiliar technology, it’s important for the glitch artist to dispel any preconceived notions of what a glitch should be. When I co-organized the first GLI.TC/H conference/festival in 2010, most of the glitch works shown were colorful, saturated, pixelated blooms or moshes. But by the third iteration, GLI.TC/H 2112, one of the most popular types of glitch was black-and-white, unconstrained, jagged characters, a type of text-based glitch known as zalgo text, best exemplified by the social media hacks of Laimonas Zakas, aka Glitchr. As it turns out, glitching social media platforms results in an entirely different set of aesthetic artifacts than glitching image files. The same can be true for AI models. One incredibly common bug in many of the diffusion models was (and sometimes still is) nightmarish human hands. I prompted Dall-E to make “a human with three eyes,” but it produced an image of a human with two eyes and eight fingers. Not only did it fail to output the requested image, it decided to create an image that emphasized these hands, positioning them on either side of the generated person’s face. These sorts of glitches manifest the model’s inherent bias. The model’s training data likely contained much more information about human faces than about human hands. It would be a mistake to ascribe the bias solely to the machine. All technologies are biased, but this is because they are made by people and people are biased. A model’s bias is really a reflection of the individuals who designed the system, the individuals who collected the training data, and all the individuals whose data they scraped and mined. But this “glitch” also points to another bias: that of the viewer. Safe to say most humans have ten fingers, but not all do. What does it say about our own bias if we categorize this image as “abnormal” and this moment as a “glitch”?

The technical complexity of AI systems can be intimidating. But it’s important to remember that this is as true for the programmers creating the systems as it is for the artists using them. What’s particular about AI is that these are the first algorithms that humans did not create themselves. They were “trained,” not “written,” and so we’re just as surprised by what they can do when they perform as intended as we are when they produce unexpected results. AI is the kind of platform-technology that will without question reshape our lives in very drastic ways, so the fact that even its creators are surprised as often as they are by these black boxes should give us pause.

The most popular examples of glitch art tend to have a dynamic, beautifully noisy and wonderfully colorful aesthetic, which is what initially draws so many of us to the works. But glitch art should not be defined by this aesthetic. As technology evolves, what it looks, sounds, feels, and smells like when it “glitches” will evolve along with it. The perspective-shifting insight that accompanies that unexpected moment, however, will not. Intentionally misusing technology in curious, playful, provocative, and strange ways is what defines the glitch ethic, and the off-kilter perspective on the system which results has never been more important.

Nick Briz is an artist, educator, and organizer based in Chicago.